What Is Statistics

Statistical science is about planning experiments, setting up models to analyse experiments and observational studies, and studying the properties of these models or the properties of some specific building blocks within these models, e.g. parameters and independence assumptions. Statistical science also concerns the validation of chosen models, often against data. Statistical application is about connecting statistical models to data.

The general statistical paradigm is based on the following steps:

1. setting up a model;

2. evaluating the model via simulations or comparisons with data;

3. if necessary, refining the model and restarting from step 2;

4. accepting and interpreting the model.

There is indeed also a step 0, namely determining the source of inspiration for setting up a statistical model. At least two cases can be identified: (i) the data-inspired model, i.e. a model is formulated depending on our experience and on what is seen in the data; (ii) the conceptually inspired model, i.e. someone has an idea about what the relevant components are and how these components should be included in the model of some process, for example.

It is obvious that when applying the paradigm, a number of decisions have to be made which unfortunately are all rather subjective. This should be taken into account when relying on statistics. Moreover, if statistics is to be useful, the model should be relevant for the problem under consideration, which is often relative to the information which can be derived from the data, and the final model should be interpretable. Statistics is instrumental, since, without expertise in the discipline in which it is applied, one usually cannot draw firm conclusions about the data which are used to evaluate the model. On the other hand, “data analysts”, when applying statistics, need a solid knowledge of statistics to be able to perform efficient analysis.

The purpose of this book is to provide tools for the treatment of the so-called bilinear models. Bilinear models are models which are linear in two “directions”. A typical example of something which is bilinear is the transformation of a matrix into another matrix, because one can transform the rows as well as the columns simultaneously. In practice rows and columns can, for example, represent a “spatial” direction and a “temporal” direction, respectively.
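As a minimal sketch of what "linear in two directions" means, the snippet below transforms a matrix from the left (acting on rows) and from the right (acting on columns) at the same time, and checks linearity in each factor separately. The matrix names `A`, `X`, `C`, the helper `transform`, and the sizes are illustrative assumptions, not notation fixed by the text at this point.

```python
import numpy as np

rng = np.random.default_rng(0)

X = rng.normal(size=(3, 4))  # rows: e.g. "spatial" direction, columns: e.g. "temporal" direction
A = rng.normal(size=(2, 3))  # acts on the rows of X
C = rng.normal(size=(4, 5))  # acts on the columns of X


def transform(A, X, C):
    """Map X to A X C, transforming rows and columns simultaneously."""
    return A @ X @ C


Y = transform(A, X, C)

# Linearity in the row factor (column factor held fixed).
A2 = rng.normal(size=A.shape)
linear_in_rows = np.allclose(
    transform(A + A2, X, C), transform(A, X, C) + transform(A2, X, C)
)

# Linearity in the column factor (row factor held fixed).
C2 = rng.normal(size=C.shape)
linear_in_cols = np.allclose(
    transform(A, X, C + C2), transform(A, X, C) + transform(A, X, C2)
)

print(Y.shape, linear_in_rows, linear_in_cols)
```

The map is linear in `A` for fixed `C` and linear in `C` for fixed `A`, but not jointly linear in the pair, which is the sense in which it is bilinear.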

Basic ingredients in statistics are the concept of probability and the assumption about the underlying distributions. The distribution is a probability measure on the space of “random observations”, i.e. observations of a phenomenon whose outcome cannot be stated in advance. However, what is a probability and what does a probability represent? Statistics uses the concept of probability as a measure of uncertainty. The probability measures used nowadays are well defined through their characterization via Kolmogorov’s axioms. However, Kolmogorov’s axioms tell us what a probability measure should fulfil, but not what it is. It is not even obvious that something like a probabilistic mechanism exists in real life (nature), but for statisticians this does not matter. The probability measure is part of a model and any model, of course, only describes reality approximately.

What Is a Statistical Model

A statistical model is usually a class of distributions which is specified via functions of parameters (unknown quantities). The idea is to choose an appropriate model class according to the problem which is to be studied. Sometimes we know exactly what distribution should be used, but more often we have parameters which generate a model class, for example the class of multivariate normal distributions with an unknown mean and dispersion. Instead of distributions, it may be convenient, in particular for interpretations, to work with random variables which are representatives of the random phenomenon under study, although sometimes it is not obvious what kind of random variable corresponds to a distribution function. In Chap. 5 of this book, for example, some cases where this phenomenon occurs are dealt with. One problem with statistics (in most cases only a philosophical problem) is how to connect data to continuous random variables. In general it is advantageous to look upon data as realizations of random variables. However, since our data points have probability mass 0, we cannot directly couple, in a mathematical way, continuous random variables to data.

There exist several well-known schools of thought in statistics advocating different approaches to the connection of data to statistical models, and these schools differ in the rigour of their methods. Examples of these approaches are “distribution-free” methods, likelihood-based methods and Bayesian methods. Note that the fact that a method is distribution-free does not mean that no assumption is made about the model. In a statistical model there are always some assumptions about randomness, for example concerning independence between random variables. Perhaps the best-known distribution-free method is the least squares approach.

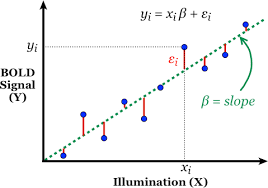

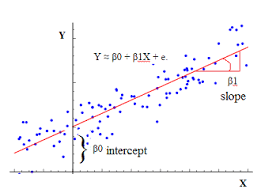

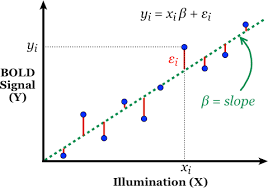

Example 1.1 In this example, several statistical approaches for evaluating a model are presented. Let

$$
\boldsymbol{x}^{\prime}=\boldsymbol{\beta}^{\prime} \boldsymbol{C}+\boldsymbol{e}^{\prime},
$$

where $\boldsymbol{x}: n \times 1$ is a random vector corresponding to the observations, $\boldsymbol{C}: k \times n$ is the design matrix, $\boldsymbol{\beta}: k \times 1$ is an unknown parameter vector which is to be estimated, and $\boldsymbol{e} \sim N_{n}\left(\mathbf{0}, \sigma^{2} \boldsymbol{I}\right)$ is considered to be the error term in the model, where $\sigma^{2}$ denotes the variance, which is supposed to be unknown. In this book the term “observation” is used in the sense of observed data which are thought to be realizations of some random process. In statistical theory the term “observation” often refers to a set of random variables. Let the projector $\boldsymbol{P}_{C^{\prime}}=\boldsymbol{C}^{\prime}\left(\boldsymbol{C} \boldsymbol{C}^{\prime}\right)^{-} \boldsymbol{C}$ be defined in Appendix A, Sect. A.7, where $(\bullet)^{-}$ denotes an arbitrary g-inverse (see Appendix A, Sect. A.6). Some useful results for projectors are presented in Appendix B, Theorem B.11.
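The projector $\boldsymbol{P}_{C^{\prime}}$ can be illustrated numerically. The following is a minimal sketch, assuming simulated data and using the Moore-Penrose inverse (`np.linalg.pinv`) as one particular choice of g-inverse; the sizes, the "true" parameter `beta` and the noise level `sigma` are hypothetical values chosen only for the illustration.

```python
import numpy as np

rng = np.random.default_rng(1)

n, k = 20, 3
C = rng.normal(size=(k, n))          # design matrix, k x n
beta = np.array([1.0, -2.0, 0.5])    # hypothetical "true" parameter vector
sigma = 0.3                          # hypothetical error standard deviation

# Simulate x' = beta' C + e' with e ~ N_n(0, sigma^2 I), stored as a column vector.
x = C.T @ beta + sigma * rng.normal(size=n)

# P_{C'} = C' (C C')^- C, with the Moore-Penrose inverse as the g-inverse.
CCt_ginv = np.linalg.pinv(C @ C.T)
P = C.T @ CCt_ginv @ C

fitted = P @ x                 # projection of x onto the column space of C'
residual = x - fitted          # orthogonal to the column space of C'
beta_hat = CCt_ginv @ C @ x    # least squares estimate, beta_hat' = x' C' (C C')^-

print("P idempotent:", np.allclose(P @ P, P))
print("residual orthogonal to rows of C:", np.allclose(C @ residual, 0.0, atol=1e-10))
print("beta_hat:", beta_hat)
```

Here the fitted values `P @ x` coincide with `C.T @ beta_hat`, so the least squares fit is exactly the orthogonal projection of the observations onto the space spanned by the rows of the design matrix.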

The General Univariate Linear Model

In this section the classical Gauss-Markov set-up is considered but we assume the dispersion matrix to be completely known. If the dispersion matrix is positive definite (p.d.), the model is just a minor extension of the model in Example 1.1. However, if the dispersion matrix is positive semi-definite (p.s.d.), other aspects related to the model will be introduced. In general, in the Gauss-Markov model the dispersion is proportional to an unknown constant, but this is immaterial for our presentation. The reason for investigating the model in some detail is that there has to be a close connection between the estimators based on models with a known dispersion and those based on models with an unknown dispersion. Indeed, if one assumes a known dispersion matrix, all our models can be reformulated as Gauss-Markov models. With additional information stating that the random variables are normally distributed, one can see from the likelihood equations that the maximum likelihood estimators (MLEs) of the mean parameters under the assumption of an unknown dispersion should approach the corresponding estimators under the assumption of a known dispersion. For example, the likelihood equation for the model $\boldsymbol{X} \sim N_{p, n}(\boldsymbol{A} \boldsymbol{B} \boldsymbol{C}, \boldsymbol{\Sigma}, \boldsymbol{I})$ which appears when differentiating with respect to $\boldsymbol{B}$ (see Appendix A, Sect. A.9 for the definition of the matrix normal distribution and Chap. 1, Sect. 1.5 for a precise specification of the model) equals

$$

\boldsymbol{A}^{\prime} \boldsymbol{\Sigma}^{-1}(\boldsymbol{X}-\boldsymbol{A} \boldsymbol{B} \boldsymbol{C}) \boldsymbol{C}^{\prime}=\mathbf{0}

$$

and for a large sample any maximum likelihood estimator of $\boldsymbol{B}$ has to satisfy this equation asymptotically, because we know that the MLE of $\boldsymbol{\Sigma}$ is a consistent estimator. Interested readers may find it worthwhile to study generalized estimating equation (GEE) theory; see, for example, Shao (2003, pp. 359-367).
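To make the likelihood equation concrete, here is a minimal numerical sketch for the case where $\boldsymbol{\Sigma}$ is treated as known and both $\boldsymbol{A}$ and $\boldsymbol{C}$ have full rank. Under those assumptions (which are mine, for illustration) the equation has the explicit solution $\widehat{\boldsymbol{B}}=(\boldsymbol{A}^{\prime}\boldsymbol{\Sigma}^{-1}\boldsymbol{A})^{-1}\boldsymbol{A}^{\prime}\boldsymbol{\Sigma}^{-1}\boldsymbol{X}\boldsymbol{C}^{\prime}(\boldsymbol{C}\boldsymbol{C}^{\prime})^{-1}$, and the code checks that it satisfies the equation. All sizes and simulated values are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(2)

p, q, k, n = 4, 2, 3, 30
A = rng.normal(size=(p, q))        # within-individuals design matrix (full column rank)
C = rng.normal(size=(k, n))        # between-individuals design matrix (full row rank)
B_true = rng.normal(size=(q, k))   # hypothetical "true" mean parameter

# A known positive definite dispersion matrix Sigma.
L = rng.normal(size=(p, p))
Sigma = L @ L.T + p * np.eye(p)

# Columns of E are independent N_p(0, Sigma), i.e. X ~ N_{p,n}(A B C, Sigma, I).
E = np.linalg.cholesky(Sigma) @ rng.normal(size=(p, n))
X = A @ B_true @ C + E

# Solve A' Sigma^{-1} (X - A B C) C' = 0 for B (Sigma known, full-rank case).
Sigma_inv = np.linalg.inv(Sigma)
AtSi = A.T @ Sigma_inv
B_hat = np.linalg.solve(AtSi @ A, AtSi @ X @ C.T) @ np.linalg.inv(C @ C.T)

# Left-hand side of the likelihood equation evaluated at B_hat.
score = AtSi @ (X - A @ B_hat @ C) @ C.T
print("max |score| at B_hat:", np.abs(score).max())
```

When $\boldsymbol{\Sigma}$ is unknown, the same equation is used with $\boldsymbol{\Sigma}$ replaced by its estimate, which is why the known-dispersion case is a useful reference point.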

Now let us discuss the univariate linear model

$$
\boldsymbol{x}^{\prime}=\boldsymbol{\beta}^{\prime} \boldsymbol{C}+\boldsymbol{e}^{\prime}, \quad \boldsymbol{e} \sim N_{n}(\mathbf{0}, \boldsymbol{V}), \tag{1.3}
$$

where $\boldsymbol{V}: n \times n$ is p.d. and known, $\boldsymbol{x}: n \times 1$, $\boldsymbol{C}: k \times n$ and $\boldsymbol{\beta}: k \times 1$ is to be estimated. Let $\boldsymbol{x}_{o}$, as previously, denote the observations of $\boldsymbol{x}$ and let us use $\boldsymbol{V}^{-1}=\boldsymbol{V}^{-1} \boldsymbol{P}_{C^{\prime}, V}+\boldsymbol{P}_{\left(C^{\prime}\right)^{o}, V^{-1}} \boldsymbol{V}^{-1}$ (see Appendix B, Theorem B.13), where $\left(\boldsymbol{C}^{\prime}\right)^{o}$ is any matrix satisfying $\mathcal{C}\left(\left(\boldsymbol{C}^{\prime}\right)^{o}\right)^{\perp}=\mathcal{C}\left(\boldsymbol{C}^{\prime}\right)$, where $\mathcal{C}(\bullet)$ denotes the column vector space (see Appendix A, Sect. A.8). Then the likelihood is maximized as follows:

$$
\begin{aligned}
L(\boldsymbol{\beta}) & \propto|\boldsymbol{V}|^{-1 / 2} \exp \left\{-\tfrac{1}{2}\left(\boldsymbol{x}_{o}^{\prime}-\boldsymbol{\beta}^{\prime} \boldsymbol{C}\right) \boldsymbol{V}^{-1}\left(\boldsymbol{x}_{o}^{\prime}-\boldsymbol{\beta}^{\prime} \boldsymbol{C}\right)^{\prime}\right\} \\
& =|\boldsymbol{V}|^{-1 / 2} \exp \left\{-\tfrac{1}{2}\left(\boldsymbol{x}_{o}^{\prime} \boldsymbol{P}_{C^{\prime}, V}^{\prime}-\boldsymbol{\beta}^{\prime} \boldsymbol{C}\right) \boldsymbol{V}^{-1}\left(\boldsymbol{x}_{o}^{\prime} \boldsymbol{P}_{C^{\prime}, V}^{\prime}-\boldsymbol{\beta}^{\prime} \boldsymbol{C}\right)^{\prime}\right\} \\
& \quad \times \exp \left\{-\tfrac{1}{2} \boldsymbol{x}_{o}^{\prime} \boldsymbol{P}_{\left(C^{\prime}\right)^{o}, V^{-1}} \boldsymbol{V}^{-1} \boldsymbol{x}_{o}\right\} \\
& \leq|\boldsymbol{V}|^{-1 / 2} \exp \left\{-\tfrac{1}{2} \boldsymbol{x}_{o}^{\prime} \boldsymbol{P}_{\left(C^{\prime}\right)^{o}, V^{-1}} \boldsymbol{V}^{-1} \boldsymbol{x}_{o}\right\},
\end{aligned}
$$

which is independent of any parameter, i.e. $\boldsymbol{\beta}$, and the upper bound is attained if and only if

$$
\widehat{\boldsymbol{\beta}}_{o}^{\prime} \boldsymbol{C}=\boldsymbol{x}_{o}^{\prime} \boldsymbol{P}_{C^{\prime}, V}^{\prime},
$$

where $\widehat{\boldsymbol{\beta}}_{o}$ is the estimate of $\boldsymbol{\beta}$. Thus, in order to estimate $\boldsymbol{\beta}$ a linear equation system has to be solved. The solution can be written as follows (see Appendix B, Theorem B.10 (i)):

$$
\widehat{\boldsymbol{\beta}}_{o}^{\prime}=\boldsymbol{x}_{o}^{\prime} \boldsymbol{V}^{-1} \boldsymbol{C}^{\prime}\left(\boldsymbol{C} \boldsymbol{V}^{-1} \boldsymbol{C}^{\prime}\right)^{-}+\boldsymbol{z}^{\prime}(\boldsymbol{C})^{o^{\prime}},
$$

where $\boldsymbol{z}^{\prime}$ stands for an arbitrary vector of a proper size.

Suppose that in model (1.3) there are restrictions (a priori information) on the mean vector given by

$$
\boldsymbol{\beta}^{\prime} \boldsymbol{G}=\mathbf{0} .
$$

Then

$$
\boldsymbol{\beta}^{\prime}=\boldsymbol{\theta}^{\prime} \boldsymbol{G}^{o^{\prime}},
$$

where $\boldsymbol{\theta}$ is a new unrestricted parameter. After inserting this relation in (1.3), the following model appears:

$$
\boldsymbol{x}^{\prime}=\boldsymbol{\theta}^{\prime} \boldsymbol{G}^{o^{\prime}} \boldsymbol{C}+\boldsymbol{e}^{\prime}, \quad \boldsymbol{e} \sim N_{n}(\mathbf{0}, \boldsymbol{V}).
$$

Thus, the above-presented calculations yield

$$
\widehat{\boldsymbol{\beta}}_{o}^{\prime} \boldsymbol{C}=\boldsymbol{x}_{o}^{\prime} \boldsymbol{P}_{C^{\prime} G^{o}, V}^{\prime}
$$

and from here, since this expression constitutes a consistent linear equation in $\widehat{\boldsymbol{\beta}}_{o}$, a general expression for $\widehat{\boldsymbol{\beta}}_{o}(\boldsymbol{\beta})$ can be obtained explicitly.
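The estimate with a known dispersion matrix, and its restricted version under $\boldsymbol{\beta}^{\prime}\boldsymbol{G}=\mathbf{0}$, can be sketched numerically as follows. This is an illustration under stated assumptions rather than a reference implementation: the data are simulated, the Moore-Penrose inverse is used as the g-inverse, the arbitrary vector $\boldsymbol{z}$ is set to zero, and the helper names `orth_complement` and `gls` as well as the particular `V` and `G` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(3)


def orth_complement(M, tol=1e-10):
    """Return a matrix whose columns span the orthogonal complement of C(M)."""
    U, s, _ = np.linalg.svd(M, full_matrices=True)
    rank = int(np.sum(s > tol))
    return U[:, rank:]


def gls(x, C, V):
    """Particular solution beta_hat' = x' V^{-1} C' (C V^{-1} C')^- (taking z = 0)."""
    V_inv = np.linalg.inv(V)
    return np.linalg.pinv(C @ V_inv @ C.T) @ C @ V_inv @ x


n, k = 15, 3
C = rng.normal(size=(k, n))                       # design matrix, k x n
idx = np.arange(n)
V = 0.5 ** np.abs(idx[:, None] - idx[None, :])    # known p.d. dispersion matrix
G = np.array([[1.0], [1.0], [0.0]])               # restriction beta_1 + beta_2 = 0
beta = np.array([1.0, -1.0, 2.0])                 # hypothetical parameter satisfying beta' G = 0

# Simulated observations x_o from x' = beta' C + e', e ~ N_n(0, V).
x = C.T @ beta + np.linalg.cholesky(V) @ rng.normal(size=n)

# Unrestricted estimate and the projector P_{C',V} = C'(C V^{-1} C')^- C V^{-1}.
V_inv = np.linalg.inv(V)
beta_hat = gls(x, C, V)
P = C.T @ np.linalg.pinv(C @ V_inv @ C.T) @ C @ V_inv

# Restricted estimate: beta = G^o theta, so the design matrix becomes G^o' C.
G_o = orth_complement(G)
theta_hat = gls(x, G_o.T @ C, V)
beta_hat_restricted = G_o @ theta_hat

print("unrestricted:", beta_hat)
print("restricted:  ", beta_hat_restricted)
print("restriction residual:", G.T @ beta_hat_restricted)
```

The restriction is handled by reparametrization instead of constrained optimization: any $\boldsymbol{\beta}$ with $\boldsymbol{\beta}^{\prime}\boldsymbol{G}=\mathbf{0}$ lies in the column space of $\boldsymbol{G}^{o}$, so the unrestricted machinery is simply reused with the design matrix $\boldsymbol{G}^{o^{\prime}}\boldsymbol{C}$.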

