计算机代写|密码学与网络安全代写cryptography and network security代考|CS499

如果你也在 怎样代写密码学与网络安全cryptography and network security这个学科遇到相关的难题,请随时右上角联系我们的24/7代写客服。

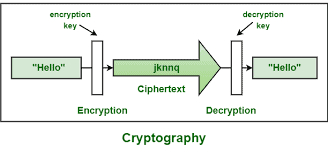

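Cryptography is the study of techniques for secure communication that allow only the sender and the intended recipient of a message to view its contents.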

Prefix Codes

In a prefix code, no codeword is a prefix, that is, the initial part, of another codeword. Therefore, the code shown in Table $3.7$ is a prefix code. On the other hand, the code shown in Table $3.8$ is not a prefix code because the binary word 10, for instance, is a prefix of the codeword 100.
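The prefix condition can be checked mechanically by comparing every ordered pair of codewords. The sketch below is only an illustration: the function name `is_prefix_code` and both example codes are assumptions made here, not the contents of Tables 3.7 and 3.8, although the second code does contain the pair 10 and 100 mentioned in the text.

```python
from itertools import permutations


def is_prefix_code(codewords):
    """Return True if no codeword is a prefix of a different codeword."""
    # permutations() yields every ordered pair (a, b) taken from two
    # different positions, so both directions of each pair are tested.
    return not any(b.startswith(a) for a, b in permutations(codewords, 2))


# Hypothetical codes chosen for illustration (not Tables 3.7 and 3.8).
prefix_free = ["1", "01", "001", "000"]
not_prefix_free = ["0", "10", "100", "111"]  # "10" is a prefix of "100"

print(is_prefix_code(prefix_free))      # True
print(is_prefix_code(not_prefix_free))  # False
```

The pairwise comparison is quadratic in the number of codewords, which is adequate for small source alphabets.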

To decode a sequence of binary words produced by a prefix encoder, the decoder begins at the first binary digit of the sequence and decodes one codeword at a time. The procedure resembles traversing a decision tree, which is a representation of the codewords of a given source code.

Figure $3.3$ illustrates the decision tree for the prefix code presented in Table 3.9.

The tree has one initial state and four final states, which correspond to the symbols $x_0, x_1, x_2$, and $x_3$. From the initial state, for each received bit, the decoder moves along the tree until a final state is reached.

The decoder then emits the corresponding decoded symbol and returns to the initial state. For example, from the initial state, after receiving a 1, the source decoder decodes symbol $x_0$ and returns to the initial state. If it receives a 0, the decoder moves to the lower part of the tree; after receiving another 0, it moves further down the tree and, after then receiving a 1, it retrieves $x_2$ and returns to the initial state.

Considering the code from Table $3.9$, with the decoding tree from Figure $3.3$, the binary sequence 011100010010100101 is decoded into the output sequence $x_1 x_0 x_0 x_3 x_0 x_2 x_1 x_2 x_1$.
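The decoding procedure can be sketched in a few lines of Python. Table 3.9 is not reproduced here, so the codeword assignment in `CODE` is an assumption, inferred from the decoded sequence quoted above; the function name `decode` is likewise hypothetical. Accumulating bits until they match a codeword plays the role of walking the tree from the initial state to a final state.

```python
def decode(bits, code):
    """Decode a bit string with a prefix code given as {symbol: codeword}."""
    inverse = {word: symbol for symbol, word in code.items()}
    symbols, current = [], ""
    for bit in bits:
        current += bit              # follow one more branch of the tree
        if current in inverse:      # a final state (leaf) has been reached
            symbols.append(inverse[current])
            current = ""            # return to the initial state
    if current:
        raise ValueError("sequence ends in the middle of a codeword")
    return symbols


# Assumed assignment, inferred from the example; not copied from Table 3.9.
CODE = {"x0": "1", "x1": "01", "x2": "001", "x3": "000"}

print(" ".join(decode("011100010010100101", CODE)))
# x1 x0 x0 x3 x0 x2 x1 x2 x1
```

Because no codeword is a prefix of another, the first match found while reading bits is the only possible one, so the decoder never needs to look ahead.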

By construction, a prefix code is always uniquely decodable, which is important to avoid any ambiguity at the receiver.

Consider a code that has been constructed for a discrete source with alphabet $\left\{x_1, x_2, \ldots, x_K\right\}$. Let $\left\{p_1, p_2, \ldots, p_K\right\}$ be the source statistics and $l_k$ be the codeword length for symbol $x_k, k=1, \ldots, K$. If the binary code constructed for the source is a prefix code, then its codeword lengths satisfy the Kraft-McMillan inequality

$$
\sum_{k=1}^K 2^{-l_k} \leq 1,
$$

in which factor 2 is the radix, or number of symbols, of the binary alphabet.
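As a quick numerical check, the sum in the Kraft-McMillan inequality can be evaluated exactly with rational arithmetic. The helper `kraft_sum` and the two sets of lengths are illustrative assumptions, not values taken from the source tables.

```python
from fractions import Fraction


def kraft_sum(lengths, radix=2):
    """Return the sum of radix**(-l) over all codeword lengths l."""
    return sum(Fraction(1, radix ** l) for l in lengths)


# Lengths of the hypothetical prefix code {1, 01, 001, 000}.
print(kraft_sum([1, 2, 3, 3]))  # 1
# Lengths that violate the inequality: no binary prefix code has them.
print(kraft_sum([1, 1, 2]))     # 5/4
```

A sum above 1 rules out any uniquely decodable binary code with those lengths, while a sum of at most 1 guarantees that a prefix code with those lengths can be built.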

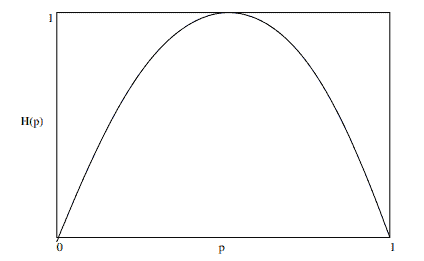

For a memoryless discrete source with entropy $H(X)$, a prefix code can be constructed whose average codeword length $\bar{L}$ is bounded by

$$
H(X) \leq \bar{L}<H(X)+1
$$

Equality on the left-hand side holds when each symbol $x_k$ is emitted by the source with probability $p_k=2^{-l_k}$, in which $l_k$ is the length of the codeword assigned to symbol $x_k$.
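The bound and its equality condition can be illustrated numerically. In the sketch below, the function names and both probability distributions are assumptions chosen for illustration: the first source has probabilities of the form $2^{-l_k}$, so the average length equals the entropy, while the second source, encoded with the same lengths, gives an average length strictly between $H(X)$ and $H(X)+1$.

```python
from math import log2


def entropy(probs):
    """Entropy of a discrete memoryless source, in bits per symbol."""
    return -sum(p * log2(p) for p in probs if p > 0)


def average_length(probs, lengths):
    """Average codeword length, weighting each length by its probability."""
    return sum(p * l for p, l in zip(probs, lengths))


lengths = [1, 2, 3, 3]                # hypothetical codeword lengths

dyadic = [0.5, 0.25, 0.125, 0.125]    # p_k = 2**(-l_k): equality case
print(entropy(dyadic), average_length(dyadic, lengths))  # 1.75 1.75

skewed = [0.7, 0.1, 0.1, 0.1]         # not of the form 2**(-l_k)
h, avg = entropy(skewed), average_length(skewed, lengths)
print(h <= avg < h + 1)               # True
```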

The Information Unit

There is some confusion between the binary digit, abbreviated as bit, and the information particle, which John Tukey and Claude Shannon also named the bit.

In a meeting of the Institute of Electrical and Electronics Engineers (IEEE), the world's largest technical professional organization, the author of this book proposed the shannon [Sh] as a unit of information transmission, equivalent to one bit per second. It is worth noting that the bit, as used today, is not a unit of information because it is not approved by the International System of Units (SI).

What was curious about that meeting was the misunderstanding that surrounded the units, in particular regarding the difference between the concepts of information unit and digital logic unit (Alencar, 2007).

To make things clear, the binary digit is associated with a certain state of a digital system, not with information. A binary digit "1" can refer to 5 volts, in TTL logic, or 12 volts, for CMOS logic.

The information bit exists independently of any association with a particular voltage level. It can be associated, for example, with discrete information or with the quantization of analog information.

For instance, the information bits recorded on the surface of a compact disk are stored as a series of depressions on the plastic material, which are read by an optical beam, generated by a semiconductor laser. But, obviously, the depressions are not the information. They represent a means for the transmission of information, a material substrate that carries the data.

In the same way, the information can exist, even if it is not associated with light or other electromagnetic radiation. It can be transported by several means, including paper, and materializes itself when it is processed by a computer or by a human being.
