如果你也在 怎样代写强化学习reinforence learning这个学科遇到相关的难题,请随时右上角联系我们的24/7代写客服。

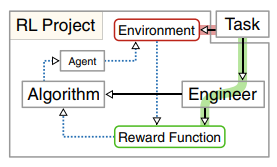

强化学习是一种基于奖励期望行为和/或惩罚不期望行为的机器学习训练方法。一般来说,强化学习代理能够感知和解释其环境,采取行动并通过试验和错误学习。

statistics-lab™ 为您的留学生涯保驾护航 在代写强化学习reinforence learning方面已经树立了自己的口碑, 保证靠谱, 高质且原创的统计Statistics代写服务。我们的专家在代写强化学习reinforence learning代写方面经验极为丰富,各种代写强化学习reinforence learning相关的作业也就用不着说。

我们提供的强化学习reinforence learning及其相关学科的代写,服务范围广, 其中包括但不限于:

- Statistical Inference 统计推断

- Statistical Computing 统计计算

- Advanced Probability Theory 高等概率论

- Advanced Mathematical Statistics 高等数理统计学

- (Generalized) Linear Models 广义线性模型

- Statistical Machine Learning 统计机器学习

- Longitudinal Data Analysis 纵向数据分析

- Foundations of Data Science 数据科学基础

机器学习代写|强化学习project代写reinforence learning代考|Reward Shaping

Many interesting problems are most naturally characterized by a sparse reward signal. Evolution, discussed in 2.1, provides an extreme example. In many cases, it is more intuitive to describe a task completion requirement by a set of constraints rather than by a dense reward function. In such environments, the easiest way to construct a reward signal would be to give a reward on each transition that leads to all constraints being fulfilled.

Theoretically, a general purpose reinforcement learning algorithm should be able to deal with the sparse reward setting. For example, Q-learning [17] is one of the few algorithms that comes with a guarantee that it will eventually find the optimal policy provided all states and actions are experienced infinitely often. However, from a practical standpoint, finding a solution may be infeasible in many sparse reward environments. Stanton and Clune [15] point out that sparse environments require a “prohibitively large” number of training steps to solve with undirected exploration, such as, e.g., $\varepsilon$-greedy exploration employed in Q-learning and other RL algorithms.

A promising direction for tackling sparse reward problems is through curiositybased exploration. Curiosity is a form of intrinsic motivation, and there is a large body of literature devoted to this topic. We discuss curiosity-based learning in more detail in Sect. 5. Despite significant progress in the area of intrinsic motivation, no strong optimality guarantees, matching those for classical RL algorithms, have been established so far. Therefore, in the next section, we take a look at an alternative direction, aimed at modifying the reward signal in such a way that the optimal policy stays invariant but the learning process can be greatly facilitated.

The term “shaping” was originally introduced in behavioral science by Skinner [13]. Shaping is sometimes also called “successive approximation”. $\mathrm{Ng}$ et al. [8] formalized the term and popularized it under the name “reward shaping” in reinforcement learning. This section details the connection between the behavioral science definition and the reinforcement learning definition, highlighting the advantages and disadvantages of reward shaping for improving exploration in sparse reward settings.

机器学习代写|强化学习project代写reinforence learning代考|Shaping in Behavioral Science

Skinner laid down the groundwork on shaping in his book [13], where he aimed at establishing the “laws of behavior”. Initially, he found out by accident that learning of certain tasks can be sped up by providing intermediate rewards. Later, he figured out that it is the discontinuities in the operands (units of behavior) that are responsible for making tasks with sparse rewards harder to learn. By breaking down these discountinuities via successive approximation (a.k.a. shaping), he could show that a desired behavior can be taught much more efficiently.

It is remarkable that many of the experimental means described by Skinner [13] cohere to the method of potential fields introduced into reinforcement learning by Ng et al. [8] 46 years later. For example, Skinner employed angle- and position-based rewards to lead a pigeon into a goal region where a big final reward was awaiting. Interestingly, in addition to manipulating the reward, Skinner was able to further speed up pigeon training by manipulating the emvironment. By keeping a pigeon hungry prior to the experiment, he could make the pigeon peck at a switch with higher probability, getting to the reward attached to that behavior more quickly.

Since manipulating the environment is often outside engineer’s control in realworld applications of reinforcement learning, reward shaping techniques provide the main practical tool for modulating the learning process. The following section takes a closer look at the theory of reward shaping in reinforcement learning and highlights its similarities and distinctions from shaping in behavioral science.

机器学习代写|强化学习project代写reinforence learning代考|Reward Shaping in Reinforcement Learning

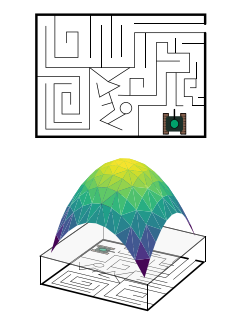

Although practitioners have always engaged in some form of reward shaping, the first theoretically grounded framework has been put forward by Ng et al. [8] under the name potential-based reward shaping $(P B R S)$. According to this theory, the shaping signal $F$ must be a function of the current state and the next state, i.e., $F: \mathcal{S} \times$ $\mathcal{S} \rightarrow \mathbb{R}$. The shaping signal is added to the original reward function to yield the

new reward signal $R^{\prime}\left(s, a, s^{\prime}\right)=R\left(s, a, s^{\prime}\right)+F\left(s, s^{\prime}\right)$. Crucially, the reward shaping term $F\left(s, s^{\prime}\right)$ must admit a representation through a potential function $\Phi(s)$ that only depends on one argument. The dependence takes the form of a difference of potentials

$$

F\left(s, s^{\prime}\right)=\gamma \Phi\left(s^{\prime}\right)-\Phi(s) .

$$

When condition (1) is violated, undesired effects should be expected. For example, in [9], a term rewarding closeness to the goal was added to a bicycle-riding task where the objective is to drive to a target location. As a result, the agent learned to drive in circles around the starting point. Since driving away from the goal is not punished, such policy is indeed optimal. A potential-based shaping term (1) would discourage such cycling solutions.

Comparing Skinner’s shaping approach from Sect. $4.1$ to PRBS, the reward provided to the pigeon for turning or moving in the right direction can be seen as arising from a potential field based on the direction and distance to the goal. From the description in [13], however, it is not clear whether also punishments (i.e., negative rewards) were given for moving in a wrong direction. If not, then Skinner’s pigeons must have suffered from the same problem as the cyclist in [9]. However, such behavior was not observed, which could be attributed to the animals having a low discount factor or receiving an internal punishment for energy expenditure.

Despite its appeal, some researchers view reward shaping quite negatively. As stated in [9], reward shaping goes against the “tabula rasa” ideal-demanding that the agent learns from scratch using a general (model-free RL) algorithm-by infusing prior knowledge into the problem. Sutton and Barto [16, p. 54] support this view, stating that

the reward signal is not the place to impart to the agent prior knowledge about how to achieve what we want it to do.

As an example in the following subsection shows, it is also often quite hard to come up with a good shaping reward. In particular, the shaping term $F\left(s, s^{\prime}\right)$ is problemspecific, and for each choice of the reward $R\left(s, a, s^{\prime}\right)$ needs to be devised anew. In Sect. 5, we consider more general shaping approaches that only depend on the environment dynamics and not the reward function.

强化学习代写

机器学习代写|强化学习project代写reinforence learning代考|Reward Shaping

许多有趣的问题最自然地以稀疏的奖励信号为特征。2.1 中讨论的进化提供了一个极端的例子。在许多情况下,通过一组约束而不是密集的奖励函数来描述任务完成要求更直观。在这样的环境中,构建奖励信号的最简单方法是对导致所有约束都满足的每个转换给予奖励。

从理论上讲,通用强化学习算法应该能够处理稀疏奖励设置。例如,Q-learning [17] 是少数几个能够保证最终找到最优策略的算法之一,前提是所有状态和动作都被无限频繁地体验。然而,从实际的角度来看,在许多稀疏奖励环境中找到解决方案可能是不可行的。Stanton 和 Clune [15] 指出,稀疏环境需要“惊人的大量”训练步骤才能通过无向探索来解决,例如,e-Q-learning 和其他 RL 算法中使用的贪婪探索。

解决稀疏奖励问题的一个有希望的方向是通过基于好奇心的探索。好奇心是一种内在动机,有大量的文献专门讨论这个话题。我们将在 Sect 中更详细地讨论基于好奇心的学习。5. 尽管内在动机领域取得了重大进展,但迄今为止还没有建立与经典 RL 算法相匹配的强最优性保证。因此,在下一节中,我们将研究一个替代方向,旨在修改奖励信号,使最优策略保持不变,但可以极大地促进学习过程。

“塑造”一词最初是由 Skinner [13] 在行为科学中引入的。整形有时也称为“逐次逼近”。ñG等。[8] 将该术语形式化并在强化学习中以“奖励塑造”的名义推广。本节详细介绍了行为科学定义和强化学习定义之间的联系,强调了奖励塑造对于改善稀疏奖励设置中的探索的优缺点。

机器学习代写|强化学习project代写reinforence learning代考|Shaping in Behavioral Science

Skinner 在他的书 [13] 中奠定了塑造的基础,他的目标是建立“行为法则”。最初,他偶然发现通过提供中间奖励可以加快对某些任务的学习。后来,他发现正是操作数(行为单位)中的不连续性导致奖励稀疏的任务更难学习。通过逐次逼近(又名整形)分解这些折扣,他可以证明可以更有效地教授期望的行为。

值得注意的是,Skinner [13] 描述的许多实验方法与 Ng 等人引入强化学习的势场方法相一致。[8] 46 年后。例如,斯金纳采用基于角度和位置的奖励来引导鸽子进入目标区域,在那里等待最终的大奖励。有趣的是,除了操纵奖励之外,斯金纳还能够通过操纵环境进一步加快赛鸽训练。通过在实验前让鸽子保持饥饿状态,他可以让鸽子以更高的概率啄食开关,从而更快地获得与该行为相关的奖励。

由于在强化学习的实际应用中操纵环境通常是工程师无法控制的,因此奖励塑造技术提供了调节学习过程的主要实用工具。下一节将仔细研究强化学习中的奖励塑造理论,并强调它与行为科学中的奖励塑造的相似之处和区别。

机器学习代写|强化学习project代写reinforence learning代考|Reward Shaping in Reinforcement Learning

尽管从业者一直在从事某种形式的奖励塑造,但 Ng 等人提出了第一个有理论基础的框架。[8] 以基于潜力的奖励塑造为名(磷乙R小号). 根据该理论,整形信号F必须是当前状态和下一个状态的函数,即F:小号× 小号→R. 将整形信号添加到原始奖励函数中以产生

新的奖励信号R′(s,一种,s′)=R(s,一种,s′)+F(s,s′). 至关重要的是,奖励塑造项F(s,s′)必须通过势函数承认表示披(s)这仅取决于一个论点。这种依赖表现为电位差的形式

F(s,s′)=C披(s′)−披(s).

当违反条件 (1) 时,应该会出现不希望的影响。例如,在 [9] 中,奖励接近目标的术语被添加到自行车骑行任务中,其中目标是开车到目标位置。结果,代理学会了绕着起点绕圈行驶。由于偏离目标不会受到惩罚,因此这种策略确实是最优的。基于电位的成形项 (1) 会阻止这种循环解决方案。

比较 Skinner 的 Sect 塑造方法。4.1对于 PRBS 来说,鸽子转向或朝正确方向移动的奖励可以看作是基于方向和距离目标的势场产生的。然而,从[13] 中的描述来看,尚不清楚是否也因朝错误的方向移动而给予惩罚(即负奖励)。如果不是,那么斯金纳的鸽子一定遇到了与 [9] 中骑自行车的人相同的问题。然而,没有观察到这种行为,这可能是由于动物的贴现因子低或因能量消耗而受到内部惩罚。

尽管它很有吸引力,但一些研究人员认为奖励塑造相当消极。如 [9] 中所述,奖励塑造违背了“白纸”理想——要求智能体使用通用(无模型 RL)算法从头开始学习——通过将先验知识注入问题中。萨顿和巴托 [16, p。[54] 支持这一观点,指出

奖励信号不是向代理传授有关如何实现我们想要它做的事情的先验知识的地方。

如以下小节中的示例所示,通常也很难提出良好的塑造奖励。尤其是整形项F(s,s′)是特定于问题的,并且对于奖励的每个选择R(s,一种,s′)需要重新设计。昆虫。5,我们考虑更一般的塑造方法,只依赖于环境动态而不是奖励函数。

统计代写请认准statistics-lab™. statistics-lab™为您的留学生涯保驾护航。

金融工程代写

金融工程是使用数学技术来解决金融问题。金融工程使用计算机科学、统计学、经济学和应用数学领域的工具和知识来解决当前的金融问题,以及设计新的和创新的金融产品。

非参数统计代写

非参数统计指的是一种统计方法,其中不假设数据来自于由少数参数决定的规定模型;这种模型的例子包括正态分布模型和线性回归模型。

广义线性模型代考

广义线性模型(GLM)归属统计学领域,是一种应用灵活的线性回归模型。该模型允许因变量的偏差分布有除了正态分布之外的其它分布。

术语 广义线性模型(GLM)通常是指给定连续和/或分类预测因素的连续响应变量的常规线性回归模型。它包括多元线性回归,以及方差分析和方差分析(仅含固定效应)。

有限元方法代写

有限元方法(FEM)是一种流行的方法,用于数值解决工程和数学建模中出现的微分方程。典型的问题领域包括结构分析、传热、流体流动、质量运输和电磁势等传统领域。

有限元是一种通用的数值方法,用于解决两个或三个空间变量的偏微分方程(即一些边界值问题)。为了解决一个问题,有限元将一个大系统细分为更小、更简单的部分,称为有限元。这是通过在空间维度上的特定空间离散化来实现的,它是通过构建对象的网格来实现的:用于求解的数值域,它有有限数量的点。边界值问题的有限元方法表述最终导致一个代数方程组。该方法在域上对未知函数进行逼近。[1] 然后将模拟这些有限元的简单方程组合成一个更大的方程系统,以模拟整个问题。然后,有限元通过变化微积分使相关的误差函数最小化来逼近一个解决方案。

tatistics-lab作为专业的留学生服务机构,多年来已为美国、英国、加拿大、澳洲等留学热门地的学生提供专业的学术服务,包括但不限于Essay代写,Assignment代写,Dissertation代写,Report代写,小组作业代写,Proposal代写,Paper代写,Presentation代写,计算机作业代写,论文修改和润色,网课代做,exam代考等等。写作范围涵盖高中,本科,研究生等海外留学全阶段,辐射金融,经济学,会计学,审计学,管理学等全球99%专业科目。写作团队既有专业英语母语作者,也有海外名校硕博留学生,每位写作老师都拥有过硬的语言能力,专业的学科背景和学术写作经验。我们承诺100%原创,100%专业,100%准时,100%满意。

随机分析代写

随机微积分是数学的一个分支,对随机过程进行操作。它允许为随机过程的积分定义一个关于随机过程的一致的积分理论。这个领域是由日本数学家伊藤清在第二次世界大战期间创建并开始的。

时间序列分析代写

随机过程,是依赖于参数的一组随机变量的全体,参数通常是时间。 随机变量是随机现象的数量表现,其时间序列是一组按照时间发生先后顺序进行排列的数据点序列。通常一组时间序列的时间间隔为一恒定值(如1秒,5分钟,12小时,7天,1年),因此时间序列可以作为离散时间数据进行分析处理。研究时间序列数据的意义在于现实中,往往需要研究某个事物其随时间发展变化的规律。这就需要通过研究该事物过去发展的历史记录,以得到其自身发展的规律。

回归分析代写

多元回归分析渐进(Multiple Regression Analysis Asymptotics)属于计量经济学领域,主要是一种数学上的统计分析方法,可以分析复杂情况下各影响因素的数学关系,在自然科学、社会和经济学等多个领域内应用广泛。

MATLAB代写

MATLAB 是一种用于技术计算的高性能语言。它将计算、可视化和编程集成在一个易于使用的环境中,其中问题和解决方案以熟悉的数学符号表示。典型用途包括:数学和计算算法开发建模、仿真和原型制作数据分析、探索和可视化科学和工程图形应用程序开发,包括图形用户界面构建MATLAB 是一个交互式系统,其基本数据元素是一个不需要维度的数组。这使您可以解决许多技术计算问题,尤其是那些具有矩阵和向量公式的问题,而只需用 C 或 Fortran 等标量非交互式语言编写程序所需的时间的一小部分。MATLAB 名称代表矩阵实验室。MATLAB 最初的编写目的是提供对由 LINPACK 和 EISPACK 项目开发的矩阵软件的轻松访问,这两个项目共同代表了矩阵计算软件的最新技术。MATLAB 经过多年的发展,得到了许多用户的投入。在大学环境中,它是数学、工程和科学入门和高级课程的标准教学工具。在工业领域,MATLAB 是高效研究、开发和分析的首选工具。MATLAB 具有一系列称为工具箱的特定于应用程序的解决方案。对于大多数 MATLAB 用户来说非常重要,工具箱允许您学习和应用专业技术。工具箱是 MATLAB 函数(M 文件)的综合集合,可扩展 MATLAB 环境以解决特定类别的问题。可用工具箱的领域包括信号处理、控制系统、神经网络、模糊逻辑、小波、仿真等。