Linear Regression Analysis: Estimated Variances

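In statistics, linear regression is a linear approach to modeling the relationship between a scalar response and one or more explanatory variables (also called the dependent and independent variables, respectively). The case of one explanatory variable is called simple linear regression; with more than one explanatory variable, the process is called multiple linear regression. This term is distinct from multivariate linear regression, in which multiple correlated dependent variables are predicted rather than a single scalar variable.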

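In linear regression, relationships are modeled using linear predictor functions whose unknown model parameters are estimated from the data. Most commonly, the conditional mean of the response given the values of the explanatory variables (or predictors) is assumed to be an affine function of those values; less commonly, the conditional median or some other quantile is used. Like all forms of regression analysis, linear regression focuses on the conditional probability distribution of the response given the values of the predictors, rather than on the joint probability distribution of all of these variables, which is the domain of multivariate analysis.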


Estimated Variances

Estimates of $\operatorname{Var}\left(\hat{\beta}_0\right)$ and $\operatorname{Var}\left(\hat{\beta}_1\right)$ are obtained by substituting $\hat{\sigma}^2$ for $\sigma^2$ in (2.11). We use the symbol $\widehat{\operatorname{Var}}(\,)$ for an estimated variance. Thus

$$
\begin{aligned}
\widehat{\operatorname{Var}}\left(\hat{\beta}_1\right) &= \hat{\sigma}^2 \frac{1}{\mathit{SXX}} \\
\widehat{\operatorname{Var}}\left(\hat{\beta}_0\right) &= \hat{\sigma}^2\left(\frac{1}{n}+\frac{\bar{x}^2}{\mathit{SXX}}\right)
\end{aligned}
$$

The square root of an estimated variance is called a standard error, for which we use the symbol se( ). The use of this notation is illustrated by

$$
\operatorname{se}\left(\hat{\beta}_1\right)=\sqrt{\widehat{\operatorname{Var}}\left(\hat{\beta}_1\right)}
$$
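The formulas above can be sketched numerically. The following is a minimal illustration, not the source's own code: it assumes the usual simple-regression estimates $\hat{\beta}_1 = \mathit{SXY}/\mathit{SXX}$, $\hat{\beta}_0 = \bar{y} - \hat{\beta}_1 \bar{x}$ and $\hat{\sigma}^2 = \mathit{RSS}/(n-2)$, which this excerpt refers to but does not restate. The function name `estimated_variances` and the data are made up for the example.

```python
import numpy as np

def estimated_variances(x, y):
    """Estimated variances and standard errors of the OLS coefficients
    in a simple linear regression of y on x (illustrative helper)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = x.size
    xbar, ybar = x.mean(), y.mean()

    sxx = np.sum((x - xbar) ** 2)           # SXX
    sxy = np.sum((x - xbar) * (y - ybar))   # SXY

    b1 = sxy / sxx                          # slope estimate
    b0 = ybar - b1 * xbar                   # intercept estimate

    rss = np.sum((y - b0 - b1 * x) ** 2)    # residual sum of squares
    sigma2_hat = rss / (n - 2)              # assumption: RSS / (n - 2)

    var_b1 = sigma2_hat / sxx
    var_b0 = sigma2_hat * (1.0 / n + xbar ** 2 / sxx)

    return {
        "b0": b0, "b1": b1, "sigma2_hat": sigma2_hat,
        "var_b0": var_b0, "var_b1": var_b1,
        "se_b0": np.sqrt(var_b0), "se_b1": np.sqrt(var_b1),
    }

# Made-up data, for illustration only.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]
result = estimated_variances(x, y)
for name, value in result.items():
    print(f"{name}: {value:.6f}")
```

The standard errors are returned alongside the variances because they share the units of the coefficients and are the quantities usually reported.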

Comparing Models: The Analysis of Variance

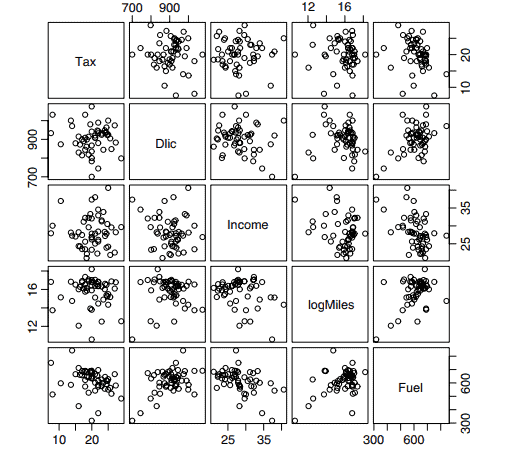

The analysis of variance provides a convenient method of comparing the fit of two or more mean functions for the same set of data. The methodology developed here is very useful in multiple regression and, with minor modification, in most regression problems.

An elementary alternative to the simple regression model suggests fitting the mean function

$$
\mathrm{E}(Y \mid X=x)=\beta_0 \tag{2.13}
$$

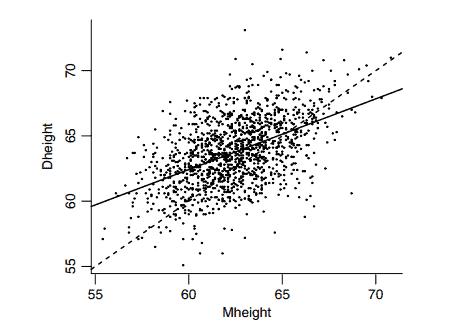

The mean function (2.13) is the same for all values of $X$. Fitting with this mean function is equivalent to finding the best line parallel to the horizontal or $x$-axis, as shown in Figure 2.4. The OLS estimate of the mean function is $\widehat{\mathrm{E}}(Y \mid X)=\hat{\beta}_0$, where $\hat{\beta}_0$ is the value of $\beta_0$ that minimizes $\sum\left(y_i-\beta_0\right)^2$. The minimizer is given by

$$
\hat{\beta}_0=\bar{y}
$$
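One way to see why the minimizer is the sample mean, sketched here as a supplementary step, is to expand the sum of squares around $\bar{y}$ and use $\sum\left(y_i-\bar{y}\right)=0$:

$$
\begin{aligned}
\sum\left(y_i-\beta_0\right)^2 &= \sum\left(y_i-\bar{y}\right)^2 + 2\left(\bar{y}-\beta_0\right)\sum\left(y_i-\bar{y}\right) + n\left(\bar{y}-\beta_0\right)^2 \\
&= \sum\left(y_i-\bar{y}\right)^2 + n\left(\bar{y}-\beta_0\right)^2
\end{aligned}
$$

The first term does not depend on $\beta_0$, and the second is nonnegative and equals zero only when $\beta_0=\bar{y}$.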

The residual sum of squares is

$$
\sum\left(y_i-\hat{\beta}_0\right)^2=\sum\left(y_i-\bar{y}\right)^2=\mathit{SYY}
$$

This residual sum of squares has $n-1$ degrees of freedom (df): $n$ cases minus one parameter in the mean function.
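As a rough numerical check of the constant mean function, the sketch below evaluates $\sum\left(y_i-\beta_0\right)^2$ over a grid of candidate values and compares the smallest value found with the sum of squares at $\bar{y}$. The data, the helper `rss_constant`, and the grid are illustrative assumptions, not part of the source.

```python
import numpy as np

# Made-up responses, for illustration only.
y = np.array([2.1, 3.9, 6.2, 7.8, 10.1])
n = y.size

def rss_constant(b0, y):
    """Residual sum of squares when every fitted value equals b0."""
    return float(np.sum((y - b0) ** 2))

b0_hat = y.mean()                # OLS estimate under the constant mean function
syy = rss_constant(b0_hat, y)    # residual sum of squares at the mean, i.e. SYY
df = n - 1                       # n cases minus one estimated parameter

# Brute-force check: no candidate on the grid gives a smaller RSS than ybar.
candidates = np.linspace(y.min(), y.max(), 201)
rss_values = np.array([rss_constant(b, y) for b in candidates])
best_on_grid = candidates[np.argmin(rss_values)]

print(f"ybar = {b0_hat:.4f}, best grid value = {best_on_grid:.4f}")
print(f"SYY = {syy:.4f} with {df} df")
```

A grid search is used only to make the minimization visible; in practice the closed form $\hat{\beta}_0=\bar{y}$ is all that is needed.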

Next, consider the simple regression mean function obtained from (2.13) by adding a term that depends on $X$

$$
\mathrm{E}(Y \mid X=x)=\beta_0+\beta_1 x \tag{2.16}
$$

Fitting this mean function is equivalent to finding the best line of arbitrary slope, as shown in Figure 2.4. The OLS estimates for this mean function are given by (2.5). The estimates of $\beta_0$ under the two mean functions are different, just as the meaning of $\beta_0$ in the two mean functions is different. For (2.13), $\beta_0$ is the mean of $Y$ at every value of $X$, estimated by the average of the $y_i$s, but for (2.16), $\beta_0$ is the expected value of $Y$ when $X=0$.
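The contrast between the two mean functions can be illustrated with a small sketch. It assumes that (2.5) gives the usual estimates $\hat{\beta}_1=\mathit{SXY}/\mathit{SXX}$ and $\hat{\beta}_0=\bar{y}-\hat{\beta}_1\bar{x}$, which are not restated in this excerpt, and it uses made-up data and variable names.

```python
import numpy as np

# Made-up data, for illustration only.
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([2.1, 3.9, 6.2, 7.8, 10.1])
n = x.size

# Mean function (2.13): E(Y | X = x) = beta0, a horizontal line.
b0_const = y.mean()
rss_const = np.sum((y - b0_const) ** 2)    # equals SYY, with n - 1 df

# Mean function (2.16): E(Y | X = x) = beta0 + beta1 * x.
sxx = np.sum((x - x.mean()) ** 2)
sxy = np.sum((x - x.mean()) * (y - y.mean()))
b1 = sxy / sxx                             # assumed form of (2.5)
b0_line = y.mean() - b1 * x.mean()         # assumed form of (2.5)
rss_line = np.sum((y - b0_line - b1 * x) ** 2)   # with n - 2 df

print(f"(2.13): beta0_hat = {b0_const:.4f}, RSS = {rss_const:.4f}, df = {n - 1}")
print(f"(2.16): beta0_hat = {b0_line:.4f}, beta1_hat = {b1:.4f}, "
      f"RSS = {rss_line:.4f}, df = {n - 2}")
print(f"Reduction in RSS from adding the slope: {rss_const - rss_line:.4f}")
```

Because (2.13) is the special case of (2.16) with $\beta_1=0$, the residual sum of squares for (2.16) can never exceed that of (2.13); the reduction equals $\mathit{SXY}^2/\mathit{SXX}$.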


统计代写请认准statistics-lab™. statistics-lab™为您的留学生涯保驾护航。

随机过程代考

在概率论概念中,随机过程是随机变量的集合。 若一随机系统的样本点是随机函数,则称此函数为样本函数,这一随机系统全部样本函数的集合是一个随机过程。 实际应用中,样本函数的一般定义在时间域或者空间域。 随机过程的实例如股票和汇率的波动、语音信号、视频信号、体温的变化,随机运动如布朗运动、随机徘徊等等。

贝叶斯方法代考

贝叶斯统计概念及数据分析表示使用概率陈述回答有关未知参数的研究问题以及统计范式。后验分布包括关于参数的先验分布,和基于观测数据提供关于参数的信息似然模型。根据选择的先验分布和似然模型,后验分布可以解析或近似,例如,马尔科夫链蒙特卡罗 (MCMC) 方法之一。贝叶斯统计概念及数据分析使用后验分布来形成模型参数的各种摘要,包括点估计,如后验平均值、中位数、百分位数和称为可信区间的区间估计。此外,所有关于模型参数的统计检验都可以表示为基于估计后验分布的概率报表。

广义线性模型代考

广义线性模型(GLM)归属统计学领域,是一种应用灵活的线性回归模型。该模型允许因变量的偏差分布有除了正态分布之外的其它分布。

statistics-lab作为专业的留学生服务机构,多年来已为美国、英国、加拿大、澳洲等留学热门地的学生提供专业的学术服务,包括但不限于Essay代写,Assignment代写,Dissertation代写,Report代写,小组作业代写,Proposal代写,Paper代写,Presentation代写,计算机作业代写,论文修改和润色,网课代做,exam代考等等。写作范围涵盖高中,本科,研究生等海外留学全阶段,辐射金融,经济学,会计学,审计学,管理学等全球99%专业科目。写作团队既有专业英语母语作者,也有海外名校硕博留学生,每位写作老师都拥有过硬的语言能力,专业的学科背景和学术写作经验。我们承诺100%原创,100%专业,100%准时,100%满意。

机器学习代写

随着AI的大潮到来,Machine Learning逐渐成为一个新的学习热点。同时与传统CS相比,Machine Learning在其他领域也有着广泛的应用,因此这门学科成为不仅折磨CS专业同学的“小恶魔”,也是折磨生物、化学、统计等其他学科留学生的“大魔王”。学习Machine learning的一大绊脚石在于使用语言众多,跨学科范围广,所以学习起来尤其困难。但是不管你在学习Machine Learning时遇到任何难题,StudyGate专业导师团队都能为你轻松解决。

多元统计分析代考

基础数据: $N$ 个样本, $P$ 个变量数的单样本,组成的横列的数据表

变量定性: 分类和顺序;变量定量:数值

数学公式的角度分为: 因变量与自变量

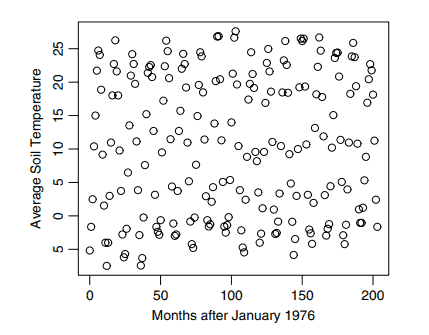

时间序列分析代写

随机过程,是依赖于参数的一组随机变量的全体,参数通常是时间。 随机变量是随机现象的数量表现,其时间序列是一组按照时间发生先后顺序进行排列的数据点序列。通常一组时间序列的时间间隔为一恒定值(如1秒,5分钟,12小时,7天,1年),因此时间序列可以作为离散时间数据进行分析处理。研究时间序列数据的意义在于现实中,往往需要研究某个事物其随时间发展变化的规律。这就需要通过研究该事物过去发展的历史记录,以得到其自身发展的规律。

回归分析代写

多元回归分析渐进(Multiple Regression Analysis Asymptotics)属于计量经济学领域,主要是一种数学上的统计分析方法,可以分析复杂情况下各影响因素的数学关系,在自然科学、社会和经济学等多个领域内应用广泛。

MATLAB代写

MATLAB 是一种用于技术计算的高性能语言。它将计算、可视化和编程集成在一个易于使用的环境中,其中问题和解决方案以熟悉的数学符号表示。典型用途包括:数学和计算算法开发建模、仿真和原型制作数据分析、探索和可视化科学和工程图形应用程序开发,包括图形用户界面构建MATLAB 是一个交互式系统,其基本数据元素是一个不需要维度的数组。这使您可以解决许多技术计算问题,尤其是那些具有矩阵和向量公式的问题,而只需用 C 或 Fortran 等标量非交互式语言编写程序所需的时间的一小部分。MATLAB 名称代表矩阵实验室。MATLAB 最初的编写目的是提供对由 LINPACK 和 EISPACK 项目开发的矩阵软件的轻松访问,这两个项目共同代表了矩阵计算软件的最新技术。MATLAB 经过多年的发展,得到了许多用户的投入。在大学环境中,它是数学、工程和科学入门和高级课程的标准教学工具。在工业领域,MATLAB 是高效研究、开发和分析的首选工具。MATLAB 具有一系列称为工具箱的特定于应用程序的解决方案。对于大多数 MATLAB 用户来说非常重要,工具箱允许您学习和应用专业技术。工具箱是 MATLAB 函数(M 文件)的综合集合,可扩展 MATLAB 环境以解决特定类别的问题。可用工具箱的领域包括信号处理、控制系统、神经网络、模糊逻辑、小波、仿真等。