如果你也在 怎样代写密码学与网络安全cryptography and network security这个学科遇到相关的难题,请随时右上角联系我们的24/7代写客服。

密码学是对安全通信技术的研究,它只允许信息的发送者和预定接收者查看其内容。

statistics-lab™ 为您的留学生涯保驾护航 在代写密码学与网络安全cryptography and network security方面已经树立了自己的口碑, 保证靠谱, 高质且原创的统计Statistics代写服务。我们的专家在代写密码学与网络安全cryptography and network security代写方面经验极为丰富,各种代写密码学与网络安全cryptography and network security相关的作业也就用不着说。

我们提供的密码学与网络安全cryptography and network security及其相关学科的代写,服务范围广, 其中包括但不限于:

- Statistical Inference 统计推断

- Statistical Computing 统计计算

- Advanced Probability Theory 高等概率论

- Advanced Mathematical Statistics 高等数理统计学

- (Generalized) Linear Models 广义线性模型

- Statistical Machine Learning 统计机器学习

- Longitudinal Data Analysis 纵向数据分析

- Foundations of Data Science 数据科学基础

计算机代写|密码学与网络安全代写cryptography and network security代考|Requirements for an Information Metric

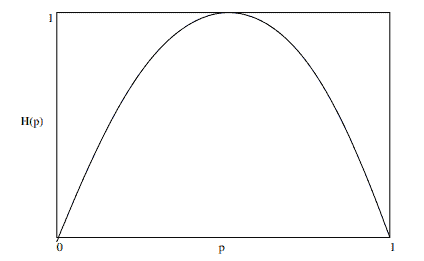

A few fundamental properties are necessary for the entropy in order to obtain an axiomatic approach to base the information measurement (Reza, 1961).

- If the event probabilities suffer a small change, the associated measure must change in accordance, in a continuous manner, which provides a physical meaning to the metric

$H\left(p_1, p_2, \ldots, p_N\right)$ is continuous in $p_k, k=1,2, \ldots, N$, (3.7) $0 \leq p_k \leq 1$. - The information measure must be symmetric in relation to the probability set $P$. The is, the entropy is invariant to the order of events

$$

H\left(p_1, p_2, p_3, \ldots, p_N\right)=H\left(p_1, p_3, p_2, \ldots, p_N\right)

$$ The maximum of the entropy is obtained when the events are equally probable. That is, when nothing is known about the set of events, or about what message has been produced, the assumption of a uniform distribution gives the highest information quantity that corresponds to the highest level of uncertainty - Maximum of $H\left(p_1, p_2, \ldots, p_N\right)=H\left(\frac{1}{N}, \frac{1}{N}, \ldots, \frac{1}{N}\right)$.

- Example: Consider two sources that emit four symbols. The first source symbols, shown in Table 3.2, have equal probabilities, and the second source symbols, shown in Table 3.3, are produced with unequal probabilities.

- The mentioned property indicates that the first source attains the highest level of uncertainty, regardless of the probability values of the second source, as long as they are different.

- Consider that an adequate measure for the average uncertainty has been found $H\left(p_1, p_2, \ldots, p_N\right)$ associated with a set of events. Assume that event $\left{x_N\right}$ is divided into $M$ disjoint sets, with probabilities $q_k$, such that

- $$

- p_N=\sum_{k=1}^M q_k,

- $$ and the probabilities associated with the new events can be normalized in such a way that

- $$

- \frac{q_1}{p_n}+\frac{q_2}{p_n}+\cdots+\frac{q_m}{p_n}=1 .

- $$

计算机代写|密码学与网络安全代写cryptography and network security代考|Source Coding

The efficient representation of data produced by a discrete source is called source coding. For a source coder to obtain a good performance, it is necessary to take the symbol statistics into account. If the symbol probabilities are different, it is useful to assign short codewords to probable symbols and long ones to infrequent symbols. This produces a variable length code, such as the Morse code.

Two usual requirements to build an efficient code are:

- The codewords generated by the coder are binary.

- The codewords are unequivocally decodable, and the original message sequence can be reconstructed from the binary coded sequence.

Consider Figure 3.2, which shows a memoryless discrete source, whose output $x_k$ is converted by the source coder into a sequence of 0 s and $1 \mathrm{~s}$, denoted $b_k$. Assume that the source alphabet has $K$ different symbol and that the $k$-ary symbol, $x_k$, occurs with probability $p_k, k=0,1, \ldots, K-1$.

Let $l_k$ be the average length, measured in bits, of the binary word assigned to symbol $x_k$. The avcrage length of the words produccd by the source coder is defined as (Haykin, 1988)

$$

\bar{L}s=\sum{k=1}^K p_k l_k .

$$

The parameter $\bar{L}$ represents the average number of bits per symbol from those that are used in the source coding process. Let $L_{\min }$ be the smallest possible value of $\bar{L}$. The source coding efficiency is defined as (Haykin, 1988)

$$

\eta=\frac{L_{\min }}{\bar{L}} .

$$

Because $\bar{L} \geq L_{\min }$, then $\eta \leq 1$. The source coding efficiency increases as $\eta$ approaches 1 .

Shannon’s first theorem, or source coding theorem, provides a means to determine $L_{\min }$ (Haykin, 1988).

Given a memoryless discrete source with entropy $H(X)$, the average length of the codewords is limited by

$$

\bar{L} \geq H(X) .

$$

Entropy $H(X)$, therefore, represents a fundamental limit for the average number of bits per source symbol $\bar{L}$, that are needed to represent a memoryless discrete source, and this number can be as small as, but never smaller than, the source entropy $H(X)$.

密码学与网络安全代考

计算机代写|密码学与网络安全代写密码学和网络安全代考|信息指标要求

为了获得信息测量的公理方法(Reza, 1961),熵的一些基本性质是必要的

- 如果事件概率发生了微小的变化,那么相关的度量必须以连续的方式进行相应的变化,这为度量

提供了物理意义$H\left(p_1, p_2, \ldots, p_N\right)$ 是连续的 $p_k, k=1,2, \ldots, N$, (3.7) $0 \leq p_k \leq 1$ - 信息度量必须与概率集对称 $P$。是,熵对事件

的顺序是不变的$$

H\left(p_1, p_2, p_3, \ldots, p_N\right)=H\left(p_1, p_3, p_2, \ldots, p_N\right)

$$ 当事件等可能时,得到熵的最大值。也就是说,当对事件集或已产生的消息一无所知时,均匀分布的假设给出了与最高不确定性水平对应的最高信息量 - 的最大值 $H\left(p_1, p_2, \ldots, p_N\right)=H\left(\frac{1}{N}, \frac{1}{N}, \ldots, \frac{1}{N}\right)$

- 示例:考虑两个发出四个符号的源。表3.2所示的第一个源符号具有等概率,表3.3所示的第二个源符号具有等概率。上述属性表明,无论第二个源的概率值如何,只要它们不同,第一个源的不确定性水平最高。

- 考虑已经找到了平均不确定度的适当测度 $H\left(p_1, p_2, \ldots, p_N\right)$ 与一组事件相关联。假设那个事件 $\left{x_N\right}$ 分为 $M$ 不相交集,有概率 $q_k$,使

- $$

- p_N=\sum_{k=1}^M q_k,

- $$ 与新事件相关的概率可以标准化为

- $$

- \frac{q_1}{p_n}+\frac{q_2}{p_n}+\cdots+\frac{q_m}{p_n}=1 .

- $$

计算机代写|密码学与网络安全代写cryptography and network security代考|Source Coding

.源编码

离散源产生的数据的有效表示称为源编码。为了使源代码获得良好的性能,必须考虑符号统计量。如果符号概率不同,将短码字分配给可能的符号,将长码字分配给不常见的符号是有用的。这就产生了可变长度的码,如莫尔斯电码。构建有效代码的两个通常要求:

- 编码器生成的码字为二进制。

- 码字是明确可解码的,由二进制编码序列可以重构出原始的消息序列。

考虑图3.2,其中显示了一个无记忆的离散源,其输出$x_k$由源代码编码器转换为0 s和$1 \mathrm{~s}$组成的序列,表示为$b_k$。假设源字母表有$K$不同的符号,$k$ -ary符号$x_k$以$p_k, k=0,1, \ldots, K-1$的概率出现。

设$l_k$为分配给符号$x_k$的二进制字的平均长度,以位为单位。由源代码编码器产生的单词的平均长度定义为(Haykin, 1988)

$$

\bar{L}s=\sum{k=1}^K p_k l_k .

$$

参数$\bar{L}$表示源编码过程中使用的每个符号的平均比特数。设$L_{\min }$为$\bar{L}$的最小值。源编码效率定义为(Haykin, 1988)

$$

\eta=\frac{L_{\min }}{\bar{L}} .

$$

因为$\bar{L} \geq L_{\min }$,那么$\eta \leq 1$。当$\eta$接近1时,源编码效率提高

香农第一定理,或源编码定理,提供了确定$L_{\min }$的方法(Haykin, 1988) 给定一个熵为$H(X)$的无记忆离散源,码字的平均长度受

$$

\bar{L} \geq H(X) .

$$

熵$H(X)$的限制,因此,表示每个源符号$\bar{L}$所需的平均比特数的基本限制,这个数字可以小到,但绝不小于源熵$H(X)$。