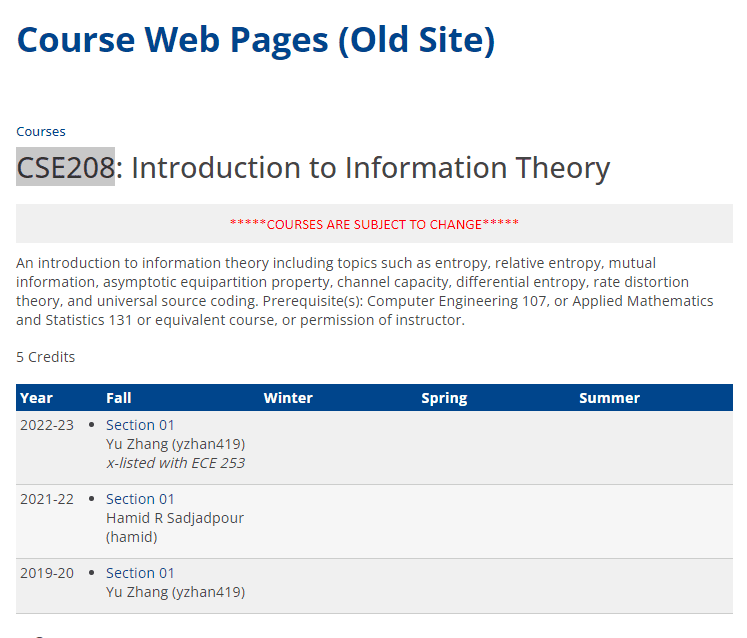

Statistics-lab™可以为您提供ucsc.edu CSE208 information theory信息论的代写代考和辅导服务!

CSE208 information theory课程简介

Information theory is the branch of mathematics that deals with the quantification, storage, and communication of information. It was developed by Claude Shannon in the 1940s, and has applications in a wide range of fields, including communications, computer science, physics, and biology.

The basic idea in information theory is that information can be thought of as a reduction in uncertainty. The more uncertain we are about something, the more information we gain when we learn about it. For example, if I tell you that the weather tomorrow will be either sunny or rainy, and you don’t know which one, then your uncertainty about the weather is high. If I then tell you that the weather will be sunny, then your uncertainty is reduced, and you gain some information.

PREREQUISITES

Information theory is the branch of mathematics that deals with the quantification, storage, and communication of information. It was developed by Claude Shannon in the 1940s, and has applications in a wide range of fields, including communications, computer science, physics, and biology.

The basic idea in information theory is that information can be thought of as a reduction in uncertainty. The more uncertain we are about something, the more information we gain when we learn about it. For example, if I tell you that the weather tomorrow will be either sunny or rainy, and you don’t know which one, then your uncertainty about the weather is high. If I then tell you that the weather will be sunny, then your uncertainty is reduced, and you gain some information.

CSE208 information theory HELP(EXAM HELP, ONLINE TUTOR)

- Coin flips. A fair coin is flipped until the first head occurs. Let $X$ denote the number of flips required.

(a) Find the entropy $H(X)$ in bits. The following expressions may be useful:

$$

\sum_{n=1}^{\infty} r^n=\frac{r}{1-r}, \quad \sum_{n=1}^{\infty} n r^n=\frac{r}{(1-r)^2} .

$$

(b) A random variable $X$ is drawn according to this distribution. Find an “efficient” sequence of yes-no questions of the form, “Is $X$ contained in the set $S$ ?” Compare $H(X)$ to the expected number of questions required to determine $X$.

(a) The number $X$ of tosses till the first head appears has the geometric distribution with parameter $p=1 / 2$, where $P(X=n)=p q^{n-1}, n \in{1,2, \ldots}$. Hence the entropy of $X$ is

$$

\begin{aligned}

H(X) & =-\sum_{n=1}^{\infty} p q^{n-1} \log \left(p q^{n-1}\right) \

& =-\left[\sum_{n=0}^{\infty} p q^n \log p+\sum_{n=0}^{\infty} n p q^n \log q\right] \

& =\frac{-p \log p}{1-q}-\frac{p q \log q}{p^2} \

& =\frac{-p \log p-q \log q}{p} \

& =H(p) / p \text { bits. }

\end{aligned}

$$

If $p=1 / 2$, then $H(X)=2$ bits.

(b) Intuitively, it seems clear that the best questions are those that have equally likely chances of receiving a yes or a no answer. Consequently, one possible guess is that the most “efficient” series of questions is: Is $X=1$ ? If not, is $X=2$ ? If not, is $X=3$ ? … with a resulting expected number of questions equal to $\sum_{n=1}^{\infty} n\left(1 / 2^n\right)=2$. This should reinforce the intuition that $H(X)$ is a measure of the uncertainty of $X$. Indeed in this case, the entropy is exactly the same as the average number of questions needed to define $X$, and in general $E$ (# of questions) $\geq H(X)$. This problem has an interpretation as a source coding problem. Let $0=$ no, $1=$ yes, $X=$ Source, and $Y=$ Encoded Source. Then the set of questions in the above procedure can be written as a collection of $(X, Y)$ pairs: $(1,1),(2,01),(3,001)$, etc. . In fact, this intuitively derived code is the optimal (Huftman) code minimizing the expected number of questions.

- Entropy of functions. Let $X$ be a random variable taking on a finite number of values. What is the (general) inequality relationship of $H(X)$ and $H(Y)$ if

(a) $Y=2^X$ ?

(b) $Y=\cos X$ ?

Solution: Let $y=g(x)$. Then

$$

p(y)=\sum_{x: y=g(x)} p(x)

$$

Consider any set of $x$ ‘s that map onto a single $y$. For this set

$$

\sum_{x: y=g(x)} p(x) \log p(x) \leq \sum_{x: y=g(x)} p(x) \log p(y)=p(y) \log p(y),

$$

since $\log$ is a monotone increasing function and $p(x) \leq \sum_{x: y=g(x)} p(x)=p(y)$. Extending this argument to the entire range of $X$ (and $Y$ ), we obtain

$$

\begin{aligned}

H(X) & =-\sum_x p(x) \log p(x) \

& =-\sum_y \sum_{x: y=g(x)} p(x) \log p(x) \

& \geq-\sum_y p(y) \log p(y) \

& =H(Y)

\end{aligned}

$$

with equality iff $g$ is one-to-one with probability one.

(a) $Y=2^X$ is one-to-one and hence the entropy, which is just a function of the probabilities (and not the values of a random variable) does not change, i.e., $H(X)=H(Y)$

(b) $Y=\cos (X)$ is not necessarily one-to-one. Hence all that we can say is that $H(X) \geq H(Y)$, with equality if cosine is one-to-one on the range of $X$.

Textbooks

• An Introduction to Stochastic Modeling, Fourth Edition by Pinsky and Karlin (freely

available through the university library here)

• Essentials of Stochastic Processes, Third Edition by Durrett (freely available through

the university library here)

To reiterate, the textbooks are freely available through the university library. Note that

you must be connected to the university Wi-Fi or VPN to access the ebooks from the library

links. Furthermore, the library links take some time to populate, so do not be alarmed if

the webpage looks bare for a few seconds.

Statistics-lab™可以为您提供ucsc.edu CSE208 information theory信息论的代写代考和辅导服务! 请认准Statistics-lab™. Statistics-lab™为您的留学生涯保驾护航。