Data Analysis Strategies and Statistical Thinking

The main goal of statistics is to learn from data so that we can make good decisions and understand real-world problems. In data analysis, the most important skill for a statistician is statistical thinking: how to obtain good data, how to choose appropriate methods to analyze them, and how to interpret the results and draw reliable conclusions. Such skills take time to develop, since a real understanding of statistical methods is harder than one may imagine. Statistical thinking differs from mathematical thinking: mathematics is often black or white (i.e., right or wrong), while statistics involves many grey areas that cannot be reduced to right or wrong. In statistics, therefore, it is sometimes more important to understand the concepts, models, and methods than to carry out mathematical derivations or proofs. Statistics is becoming one of the most important subjects in the modern world, since important decisions in almost all fields are now based on information obtained from data through data analysis. As Samuel S. Wilks put it, paraphrasing H. G. Wells, “Statistical thinking will one day be as necessary for efficient citizenship as the ability to read and write.”

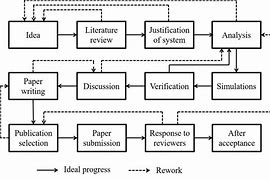

In data analysis, it is desirable to follow certain procedures. These procedures are reflections of statistical thinking. Specifically, a good statistical analysis should consist of the following steps:

- objectives.

- data collection.

- exploratory analysis.

- confirmatory analysis.

- interpretation of results.

- conclusions.

Before collecting data, we should be clear about the study objectives, since they determine how the data should be collected. Getting good data is an important step, since there is not much that statistics can do if the data are poorly collected. There are generally two ways to collect data: designed experiments and observational studies (e.g., sample surveys). Designed experiments often involve randomization, which allows us to make causal inferences. Observational studies such as sample surveys allow us to find associations. Nowadays, massive data are also generated automatically in many other ways, such as data from the internet and records of business transactions. A good understanding of how the data are generated helps us draw reliable conclusions from the analysis.
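The difference between association and causation can be illustrated with a small simulation. The sketch below is not from the text: the tutoring scenario, the variable names, and all numeric settings are hypothetical. Tutoring is given no effect on the score at all, yet the observational comparison shows a large difference because stronger students choose tutoring more often, while the randomized comparison shows a difference close to zero.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 20_000

# Hypothetical confounder: prior ability raises the exam score.
# Tutoring itself has NO effect on the score in this simulation.
ability = rng.normal(size=n)

# Observational study: students with higher ability choose tutoring more often.
chose = (ability + rng.normal(size=n)) > 0
score_obs = 60 + 10 * ability + rng.normal(scale=5, size=n)
diff_obs = score_obs[chose].mean() - score_obs[~chose].mean()

# Designed experiment: tutoring is assigned by a fair coin flip,
# so the two groups have the same ability distribution on average.
assigned = rng.random(n) < 0.5
score_exp = 60 + 10 * ability + rng.normal(scale=5, size=n)
diff_exp = score_exp[assigned].mean() - score_exp[~assigned].mean()

print(f"observational difference in mean score: {diff_obs:.2f}")
print(f"randomized difference in mean score:    {diff_exp:.2f}")
```

In the observational arm the gap reflects the confounder rather than any effect of tutoring, which is why such data support statements about association only.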

Outline

The topics of multivariate analysis can be quite extensive, since many statistical models and methods involving more than one variable may be viewed as multivariate analysis in a general sense. In some classic textbooks, multivariate analysis focuses mostly on models and methods for multivariate normal distributions with i.i.d. data. Such a focus facilitates theoretical development, since multivariate normal distributions have many nice properties and elegant mathematical expressions. In practice, however, real data are highly complex, and the multivariate normal assumption may not hold: the data may not follow normal distributions, may be discrete, and may not be independent. This book focuses more on how to analyze data from real-world problems. We select topics that are among the most commonly used in practice. Due to space limitations, some topics that may also be important in practice have to be omitted.

Chapters 2 to 4 may be viewed as exploratory multivariate analysis for continuous data. The goal is to reduce the dimension of multivariate data or to classify multivariate observations. Distributional assumptions may not be required, although in some cases we may assume multivariate normal distributions for inference. Chapter 5 considers statistical inference for multivariate normal distributions, including hypothesis testing for the mean vector and covariance matrix, which is often a major focus of classical textbooks. Chapter 6 reviews methods for multivariate discrete data, including inference for contingency tables. Chapter 7 briefly introduces copula models, which are useful for non-normal multivariate data. Chapters 8 to 10 briefly review linear and generalized linear regression models, with MANOVA models viewed as special linear models. Chapter 11 presents models for dependent data, especially models for repeated measurements and longitudinal data. Chapter 12 discusses how to handle missing data in multivariate datasets, since missing data are very common in practice and can have substantial effects on analysis results. Chapter 13 briefly describes robust methods for multivariate analysis in the presence of outliers, an important topic since outliers are not easily detected in multivariate datasets but can have a serious impact on analysis results. Chapter 14 briefly describes some general methods that are useful in multivariate analysis.

The focus of this book is on conceptual understanding of the models and methods for multivariate data, rather than tedious mathematical derivations or proofs. Extensive real data examples are presented in R. Students completing this course should be ready to perform statistical analysis of multivariate data from real world problems.

The Basic Idea

In practice, a dataset may contain many variables, such as exam scores in algebra, calculus, geometry, violin, piano, and guitar. Some of these variables may be highly correlated, such as the scores in violin, piano, and guitar. Such multivariate data are difficult to display graphically. Moreover, if we have to assume parametric distributions for these variables for statistical inference, there may be too many parameters to estimate, or there may be multicollinearity problems. For example, if we assume a multivariate normal distribution for 6 variables, the covariance matrix alone will contain 21 distinct parameters (6 variances and 15 covariances). With so many parameters, the estimated covariance matrix may be singular or ill-conditioned, and when the sample size is not large, the parameters may be poorly estimated. It is therefore important to reduce the number of variables, provided that not much information is lost. This dimension reduction is possible because highly correlated variables can be replaced by fewer new variables without much loss of information.
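The count of 21 follows from the symmetry of the covariance matrix. As a short worked check (the symbol $p$ for the number of variables is notation introduced here for illustration):

$$
\underbrace{p}_{\text{variances}} + \underbrace{\binom{p}{2}}_{\text{covariances}} = \frac{p(p+1)}{2}, \qquad \text{so for } p = 6:\quad 6 + 15 = 21 .
$$

Counting the 6 means as well, a 6-variable multivariate normal model has $6 + 21 = 27$ parameters, and the covariance part grows quadratically in $p$.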

For example, if we have exam scores in six courses (variables), namely algebra, calculus, geometry, violin, piano, and guitar, we can use a new variable called mathematics skills to represent the first three variables and another new variable called music skills to represent the last three. These two new variables retain most of the information in the original six variables. Moreover, they are uncorrelated and can be obtained as linear combinations of the original six variables. We have therefore reduced the number of variables from 6 to 2 without much loss of information. The scores on the original six variables can be converted to scores on the two new variables, and we can plot the scores on the two new variables to check the normality assumption and look for possible outliers. That is the basic idea of dimension reduction (the dimension of the original data space is 6, while the dimension of the new data space is 2), and the idea behind principal components analysis. The two new variables are called principal components.
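The exam-score example can be sketched numerically. The code below is only an illustration under stated assumptions: the data are simulated (two hypothetical independent latent skills plus noise, with an arbitrary noise level), and the principal components are computed with NumPy from the eigendecomposition of the sample correlation matrix, which is one common way to carry out PCA rather than a procedure taken from the text.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 500

# Hypothetical data: two independent latent skills drive six exam scores.
math_skill = rng.normal(size=n)
music_skill = rng.normal(size=n)


def noise():
    """Independent measurement noise for one exam (assumed scale 0.4)."""
    return rng.normal(scale=0.4, size=n)


names = ["algebra", "calculus", "geometry", "violin", "piano", "guitar"]
X = np.column_stack([
    math_skill + noise(), math_skill + noise(), math_skill + noise(),
    music_skill + noise(), music_skill + noise(), music_skill + noise(),
])

# PCA via eigendecomposition of the sample correlation matrix.
R = np.corrcoef(X, rowvar=False)
eigvals, eigvecs = np.linalg.eigh(R)      # eigenvalues in ascending order
order = np.argsort(eigvals)[::-1]         # reorder: largest variance first
eigvals, eigvecs = eigvals[order], eigvecs[:, order]

# Proportion of total variance explained by each component.
explained = eigvals / eigvals.sum()

# PC scores: standardized data projected onto the first two eigenvectors.
Z = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
scores = Z @ eigvecs[:, :2]
score_corr = np.corrcoef(scores, rowvar=False)[0, 1]

print("variance explained by the first two PCs:", round(explained[:2].sum(), 3))
print("correlation between the two PC scores:", round(score_corr, 6))
```

With these settings the first two components should account for most of the total variance, and their scores are uncorrelated by construction. Because the two leading eigenvalues are nearly equal in this simulation, each component may be a mixture of the mathematics and music groups rather than a clean "mathematics" or "music" variable; the pair still spans the same two-dimensional space.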

Much of the information in data can be measured by the variability (or variance) of the variables. In other words, if the values of a variable are all the same, the variable carries little information. The basic idea of principal components analysis (PCA) is to explain the variability in the original set of correlated variables through a smaller set of uncorrelated new variables. These new variables are obtained as certain linear combinations of the original variables, and they are called the principal components (PCs). That is, the goal of PCA is to reduce the number of original variables while maintaining most of the information (variation) in the original data, i.e., it is a dimension-reduction method. Since the PCs are linear combinations of the original variables, normality and outliers in the original data should still be present in the “new data” of the PCs (called PC scores), so we can check multivariate normality or look for outliers in the original data based on the PC scores, which is easier since the new data have a lower dimension.
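In standard notation (the symbols below are introduced here for illustration and do not appear in the text), let $\mathbf{x}$ be the vector of $p$ original variables with covariance matrix $\Sigma$, and let $\lambda_1 \ge \cdots \ge \lambda_p \ge 0$ be the eigenvalues of $\Sigma$ with orthonormal eigenvectors $\mathbf{a}_1, \ldots, \mathbf{a}_p$. A sketch of the usual definition of the PCs is then:

$$
y_j = \mathbf{a}_j^{\top}\mathbf{x}, \qquad \operatorname{Var}(y_j) = \lambda_j, \qquad \operatorname{Cov}(y_j, y_k) = 0 \quad (j \ne k),
$$

$$
\frac{\lambda_1 + \cdots + \lambda_k}{\lambda_1 + \cdots + \lambda_p} = \text{proportion of the total variance retained by the first } k \text{ PCs}.
$$

Keeping only the first $k$ components is reasonable when this ratio is close to 1.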

